Managing Open-Source Docker Images on Docker Hub using Travis

I recently worked on a small project to learn the Docker toolchain and get a feel for how things work. I decided to build (yet another) open-source Docker image of etcd and publish it on Docker Hub. As with most open-source projects, building a working Docker image was pretty straightforward and didn’t take much time. What took longer was publishing it in a way that makes it useful to others. In that process, I learned a few things about working with Docker, Docker Hub and Travis, and I still have a few unanswered questions. So I thought it would be worth documenting my findings and those open questions to get feedback and improve my understanding.

Laying out my requirements first:

- Supporting latest and a few older versions of an application seems like a common practice in the Docker community (and that makes sense). Supporting the common distributions and their slim versions also seems like a common practice. So this image should follow the same practices that the community does.

- Automatic sync of README on Github to the description on Docker Hub is a must. My assumption is that developers usually discover Docker images on Docker Hub itself. So just having a nice README on Github is not enough. The documentation has to be present on Docker Hub too. And I don’t want to write the docs in my git repository and then manually copy it to Docker Hub every time I make changes to it.

- The image itself should be very simple to use, both for running etcd as a server and as a CLI client. I won’t cover that here because it is somewhat unrelated to the subject of this post. But if it interests you, do check out the README and give feedback by opening an issue in the Github project itself.

The first challenge was having an automated process to build new images and upload them to Docker Hub. There is a nice blog post that documents how to achieve this using Travis so that every push to the repository builds a new image and when a branch is merged, the built image is pushed to Docker Hub. This was fairly simple to set up.
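The "push only when a branch is merged" part can be sketched as a small shell check that a Travis job runs before pushing. This is a minimal illustration, not the setup from the blog post mentioned above: `TRAVIS_BRANCH` and `TRAVIS_PULL_REQUEST` are variables that Travis sets, while the function name `should_push` and the choice of `master` as the release branch are assumptions.

```shell
#!/bin/sh
# Decide whether this CI run should push images to Docker Hub.
# TRAVIS_BRANCH and TRAVIS_PULL_REQUEST are set by Travis; the function
# name and the "master" release branch are assumptions for illustration.
should_push() {
    branch="$1"
    pull_request="$2"
    # Travis sets TRAVIS_PULL_REQUEST to "false" for non-PR builds and to
    # the PR number otherwise. For PR builds TRAVIS_BRANCH is the target
    # branch, so both conditions have to be checked.
    [ "$branch" = "master" ] && [ "$pull_request" = "false" ]
}

if should_push "${TRAVIS_BRANCH:-}" "${TRAVIS_PULL_REQUEST:-}"; then
    echo "push: yes"
else
    echo "push: no"
fi
```

In a real job, the "yes" branch is where the login and `docker push` steps would go; every other build only builds and tests the image.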

But as simple as it is, it doesn’t fulfill any of my requirements. Let’s try to address them one by one.

Problem #1 — Automatically Syncing README

While the above build process on Travis can build and push images to Docker Hub, it fails to sync the README. The Docker CLI also does not have any support for updating the description on Docker Hub.

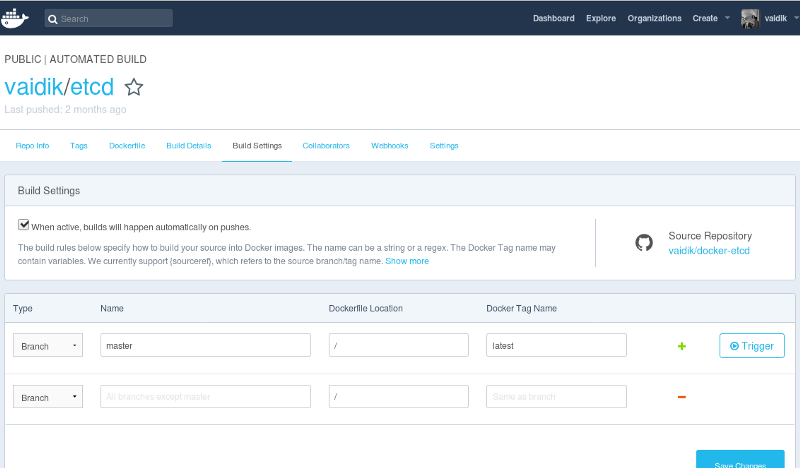

Docker Hub has something called Automated Builds which can be configured to produce similar results as our Travis job does. And, it can also pull contents of the README from the Github repo for using on Docker Hub as description. So we can use Automated Builds for building images and syncing the README from Github. This addresses one of our requirements.

Automated Builds are configured on Docker Hub: you specify the path to the Docker context (i.e. the path to the Dockerfile), and Docker Hub builds the image from that Dockerfile and tags it with a tag of your choosing. This means that for every image to be built from your repository, there has to be a Dockerfile present at a unique path. This will turn out to be a problem soon.

Problem #2 — Running Tests

While Automated Builds on Docker Hub solve the README syncing problem, they come with problems of their own. You cannot use them to run tests and get test status on PRs. There is simply no way to get feedback from Docker Hub’s build system to Github. This is critical for a good developer workflow.

So one thing is for sure — we need to go back to Travis for running tests and blocking PRs if builds fail.

Problem #3 — Building images for multiple versions

I wanted to build etcd not only for the latest version but also for the previous version and build all of those for multiple distributions.

Versions to support:

- 3.3 (latest)

- 3.2

Distributions to support:

- debian:stretch-slim

- alpine

So we had to build a total of 4 images — every version for every distribution.

First, let’s look at the multiple versions problem only.

I started checking out how other open-source Docker images achieve this. And I was not too pleased.

Look at the MySQL image’s Dockerfiles. Each version of MySQL has a dedicated Dockerfile in a separate “version directory” in the repository, and they hardly differ. See these two Dockerfiles of MySQL: the Dockerfile for MySQL 5.6 and the one for MySQL 5.7. Across 76 lines of code, only one line differs between them, and even on that line only the version number is different.

I observed a very similar pattern in Nginx’s Dockerfiles as well. On average, only 5–10% of the lines differed between the Dockerfiles for each version and base image.

This seems like an enormous amount of code duplication. I didn’t want to do this because the only thing that differed in every image was the version and package management (Alpine and Debian use different package managers).

Dockerfile’s ARG instruction can be used to simplify this. ARG defines variables that can be set at build time by passing values to the docker build command. This is super handy. Since the only difference between the Dockerfiles for each version is the version itself in the URL, I could use this to build Docker images for different versions from the same Dockerfile with the following commands:

    docker build . -t "3.3" --build-arg version=3.3
    docker build . -t "3.2" --build-arg version=3.2

Using this in Travis with an environment matrix, we can build Docker images automatically for multiple versions. See this snippet of .travis.yml for building multiple images for each version:

    language: bash
    services: docker
    env:
      matrix:
        - VERSION=3.3.1
        - VERSION=3.2.19
    script:
      - docker build . -t "$VERSION" --build-arg version=$VERSION

This solves the multiple images for multiple versions problem. But we still have to address building images for each base image for each version as well.
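Outside Travis, the same per-version builds can be reproduced locally with a short loop. The sketch below is only an illustration: the `DRY_RUN` switch and the `build_version` function are hypothetical names, and by default the script just prints the commands instead of running them.

```shell
#!/bin/sh
# Print (or run) one `docker build` per etcd version using a single Dockerfile.
# DRY_RUN and build_version are hypothetical names for this sketch.
DRY_RUN="${DRY_RUN:-1}"

build_version() {
    version="$1"
    # Assemble the command as positional parameters so it can be either
    # printed or executed without re-parsing a string.
    set -- docker build . -t "$version" --build-arg "version=$version"
    if [ "$DRY_RUN" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

for v in 3.3.1 3.2.19; do
    build_version "$v"
done
```

Setting `DRY_RUN=0` runs the builds for real, which requires Docker and a Dockerfile that declares the `version` build argument.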

Problem #4 — Building for multiple base images

Very similar to the problem of building images for multiple versions, we have the problem of building images for multiple base images.

One additional challenge in this case is that every distribution has its own way of doing things, for example package management: Alpine and Debian use different package managers. So just using ARGs would not be enough to handle all the differences; we also need to manage how we install packages. In this case, we need a package only to install etcd, not to run it. If we can somehow get just the etcd binary without anything extra, we can make the perfect Docker image.

Multi-Stage Docker Builds and the Builder Pattern

Docker’s Multi-Stage Builds is a slick way to cleanly build Docker images. Often in the build process, we install a lot of random packages. Cleanup can be a pain after you have successfully built your application.

One of the important things while building Docker images is to keep the size down. Some people write cleanup instructions using bash scripts. Some people keep development and production Dockerfiles separate, where the development Dockerfile yields a bloated image with everything that you need for development and the production Dockerfile yields a docker image which has only the necessary artifacts from the development Docker image. But both the approaches have their own bits of ugliness.

Multi-Stage builds simplifies some of this and gives you a way to organize your Dockerfiles better. You can add multiple stages in your Dockerfiles, building a completely separate Docker image for each stage and use additional constructs to make it simple for you to copy artifacts from one stage to another. See the bold line in this example from the docs on Docker Hub:

    FROM golang:1.7.3
    WORKDIR /go/src/github.com/alexellis/href-counter/
    RUN go get -d -v golang.org/x/net/html
    COPY app.go .
    RUN CGO_ENABLED=0 GOOS=linux go build -a -installsuffix cgo -o app .

    FROM alpine:latest
    RUN apk --no-cache add ca-certificates
    WORKDIR /root/
    COPY --from=0 /go/src/github.com/alexellis/href-counter/app .
    CMD ["./app"]

Firstly, notice that this Dockerfile has multiple FROM instructions. Each FROM instruction starts a new build stage, resulting in a completely different image. The COPY instruction takes an option called --from, which can be used to copy files from other build stages. Use this to copy only what you want in your final image and leave behind everything that you don’t need.

Coupling Multi-Stage builds with ARG can solve our problem. We can now take both version and base_image, and use base_image with the FROM instruction to choose the base image at build time. Note that an ARG used in a FROM instruction has to be declared before the first FROM:

    ARG base_image

    FROM debian:stretch-slim
    ...
    FROM "$base_image"
    ...
    COPY --from=0 /path/to/etcd-binary /usr/local/bin
    CMD ["etcd"]

And then run the following commands to build all our images:

    # Version 3.3 on debian:stretch-slim
    docker build . -t "3.3" --build-arg version=3.3 \
      --build-arg base_image="debian:stretch-slim"

    # Version 3.3 on alpine:latest
    docker build . -t "3.3:alpine" --build-arg version=3.3 \
      --build-arg base_image="alpine:latest"

    # Version 3.2 on debian:stretch-slim
    docker build . -t "3.2" --build-arg version=3.2 \
      --build-arg base_image="debian:stretch-slim"

    # Version 3.2 on alpine:latest
    docker build . -t "3.2:alpine" --build-arg version=3.2 \
      --build-arg base_image="alpine:latest"

See the full Dockerfile here.

This solves our problem of building the image for multiple versions, for multiple base images without duplicating code at all.
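The version and base image combinations can also be generated rather than written out by hand. The following sketch prints one `docker build` command per pair; the helper names `image_tag` and `build_command` are hypothetical, and the tag scheme (plain version for Debian, an `:alpine` suffix for Alpine) simply mirrors the commands shown earlier in this section.

```shell
#!/bin/sh
# Sketch: derive an image tag per (version, base image) pair and print the
# matching `docker build` command. Function names are hypothetical; the tag
# scheme follows the hand-written commands (plain version for Debian,
# ":alpine" suffix for Alpine).
image_tag() {
    case "$2" in
        alpine*) echo "$1:alpine" ;;
        *)       echo "$1" ;;
    esac
}

build_command() {
    tag="$(image_tag "$1" "$2")"
    echo "docker build . -t \"$tag\" --build-arg version=$1 --build-arg base_image=\"$2\""
}

# Every version is built on every base image.
for v in 3.3 3.2; do
    for b in debian:stretch-slim alpine:latest; do
        build_command "$v" "$b"
    done
done
```

Adding a new version or base image then means extending one of the two lists instead of adding another Dockerfile.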

Multi-Stage Builds have some other cool features too. I recommend you check them out for a better Docker experience.

With this, we can build all our images on Travis by providing different environments in the environment build matrix, letting us build the image, run tests and push the built images to Docker Hub on merging to master for each of those environments. Here is a sample .travis.yml file (extension of our previous example):

    language: bash
    services: docker
    env:
      matrix:
        - VERSION=3.3.1 BASE_IMAGE=debian:stretch-slim
        - VERSION=3.2.19 BASE_IMAGE=debian:stretch-slim
        - VERSION=3.3.1 BASE_IMAGE=alpine
        - VERSION=3.2.19 BASE_IMAGE=alpine
    script:
      - |
        docker build . -t "$VERSION" \
          --build-arg version=$VERSION \
          --build-arg base_image="$BASE_IMAGE"

But the problem of automatic syncing of README still remains, and seems kind of impossible to do via Travis. Let’s see what we can do about that.

Problem #5 — Tying it all together

So here we are with the following progress:

- We know how to build Docker images for each version and each base image. We can do this in a manageable way without duplicating code. And we can build them on Travis.

- We can build images on Travis and push them to Docker Hub. But we won’t get our README on Docker Hub.

- We can build images on Docker Hub using Automated Builds and have our README sync to Docker Hub. But we cannot build images for every pull request on Github and block merging pull requests if the build fails. There is just no feedback to Github.

So while we can use Travis for building all our images and running tests for every PR, we cannot rely on it alone for publishing, because images pushed from Travis do not bring the README along. And the opposite is true for Docker Hub. In fact, Docker Hub’s Automated Build system in its simplest form cannot be used in our ARG-based setup, because Automated Builds work with a Docker context, i.e. they build an image for every path specified in the build settings and expect a Dockerfile to be present there. You cannot pass CLI arguments in this kind of setup. So our custom docker build commands would not work with Automated Builds.

Custom Build Phase Hooks for Automated Builds on Docker Hub

Custom Build Phase Hooks allow you to do more advanced customization of Automated Builds by allowing you to execute custom scripts in different build phases. For example, you can override the build phase to pass extra arguments to docker build or you can override the push phase to push to multiple repositories. And of course, there is a lot more that you can do.
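As a rough sketch of what overriding the build phase can look like, the script below would live at `hooks/build` next to the Dockerfile. `DOCKER_TAG` and `IMAGE_NAME` are environment variables that Docker Hub provides to hooks. Everything else is an assumption made for illustration: the tag naming scheme (`3.3.1` for Debian, `3.3.1-alpine` for Alpine) and the helper names are hypothetical and not taken from the actual project.

```shell
#!/bin/sh
# Sketch of a hooks/build script for Docker Hub Automated Builds.
# DOCKER_TAG and IMAGE_NAME are provided by Docker Hub's build environment.
# ASSUMPTION: tags look like "3.3.1" (Debian) or "3.3.1-alpine"; this naming
# scheme and the helper names are hypothetical, not taken from the project.
tag_version() {
    # Strip an optional "-<suffix>" from the tag.
    echo "${1%%-*}"
}

tag_base_image() {
    case "$1" in
        *-alpine) echo "alpine" ;;
        *)        echo "debian:stretch-slim" ;;
    esac
}

build_args() {
    echo "--build-arg version=$(tag_version "$1") --build-arg base_image=$(tag_base_image "$1")"
}

# Only build when running inside Docker Hub (or with both variables set).
if [ -n "${DOCKER_TAG:-}" ] && [ -n "${IMAGE_NAME:-}" ]; then
    # Word splitting of the build_args output is intentional here.
    docker build . -t "$IMAGE_NAME" $(build_args "$DOCKER_TAG")
fi
```

Because the hook only reads environment variables, the same script could in principle be called from Travis by exporting `DOCKER_TAG` and `IMAGE_NAME` before running it.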

This finally solves all our problems:

- We can use our Travis setup for building and running tests for every PR but not push images to Docker Hub.

- We can use Automated Builds with Custom Phase Hooks on the master branch only. This will push our images to Docker Hub and also sync the README from Github.

The build hook scripts are super generic. I have written them in such a way that I use the same build script for building our images in Travis — DRY FTW!

You can check out the entire setup here:

Conclusion

No code duplication, easy process for building, good development experience and automatic syncing of the README — this really works for me and I am extremely satisfied with the setup at the end.

Some might say that using both Docker Hub Automated Builds and Travis might be hard to maintain. There may be a workaround for pushing PR status to Github from the custom build hooks, but I have not explored that so far, and it looks like a lot more work than maintaining Travis alongside Automated Builds on Docker Hub.

One thing that I am not sure of though is my approach to building multiple Docker images using ARG and Multi-Stage Builds. While it works for me, my experience so far in this space is limited and I would like to get feedback. What do you think? Is this a better way for building and distributing open-source Docker images? Please let me know what you think through the comments section.